HTML Losing Its Version Number

Jan 22, 2011

According to the WHATWG blog, future versions of the HTML specification will no longer use a version number. I can't imagine why this is a good idea. How are web designers supposed to know how to target their sites to visitors? A "living and breathing spec" will require frequent updates from every browser vendor, so as to stay current with what's allowed. As new features are implemented, how will developers know who's compliant and who's not? It seems to me that removing the version is a big step backwards. Perhaps they have ways of handling these situations?

Jury Duty

Sep 26, 2010

Earlier this week, I had the opportunity to serve on a jury for the first time. The experience lasted for three full days and I learned a lot about how the process works. Now that the case is closed and I can openly discuss it, I figured I'd write up a little bit about my experience. I'll go through each day's proceedings, the case itself, and the outcome.

Day 1: Wednesday

Court is held in the Durham County Judicial Building, located in downtown Durham. The building itself is fairly old and could really stand to be replaced, something Durham is working on (a new building is currently under construction). Jurors are asked to report at 8:30 AM on the first day which, as it turns out, is when the building opens to the public. Going through security to enter the building was a bother. Men have to remove their belts, and everyone must empty their pockets and pass through a metal detector. All bags are also x-rayed. I lost my pocket knife in the process, as you aren't allowed to take "weapons" into the building. I could have taken my knife back to my car, but it was a good three- to four-block walk back to the juror parking deck, so I decided to simply toss it.

Upon reaching the jury pool room on the fifth floor, I checked in and took a seat. Although I didn't count, there were apparently over 100 potential jurors (numbers 1 through 160 had been called to report that day). After everyone was checked in, we all watched a short video about the process of a judicial case. The jury clerk, a very nice lady, then came out and gave us further information and instructions. After that was complete, we sat. And sat. And sat some more. Our lunch break was quite long that day (12:15 to 2:30), which allowed me to kill some time. The day ends at 5:00, so at 4:00 we all figured we were going to get through the day without being called. At 4:10, that all changed. All the jurors were called into the Superior Court courtroom to be seen by a judge. The judge apologized for the delay (he had been held up by a previous case), and asked us all to report at 9:30 the next morning to be selected for a case involving assault on a female. Before letting us go, he asked if anyone had a reason to be excused. It was interesting to see which reasons he allowed and which he denied. One individual was a convicted felon, which got him off the hook immediately. Two people were excused for being current or former corrections officers. Other folks tried to be excused, but the judge only allowed a few to go (most of the excuses were denied). He then released us all for the rest of the day.

Day 2: Thursday

I decided to show up a little earlier than requested, just to err on the side of caution, so I came back to the courthouse at 8:45 the next morning. After going through security again, those of us from the previous day were seated in a sectioned-off area of the jury pool room. Around 9:45 or so, we were all called back to the same courtroom as the previous day. After we were seated, the judge explained what the case concerned. We were to be trying a criminal case in which a male was accused of assault on a female. The state was prosecuting the case without the victim, who had either chosen not to appear or was unable to (it was never made clear why she wasn't present).

The court clerk called 12 names, mine being one of them. We proceeded one by one to the jury box, and were then questioned by the attorney representing the state. After asking each of us a number of questions, the state attorney decided to excuse three jurors. Three more names were called, and those folks were asked the same questions. Once the state was satisfied, the defense attorney questioned each of us. He was dissatisfied with several jurors, and asked that they be removed. It's interesting to note that each side can excuse 6 jurors for no reason at all. Beyond that, they must give a valid reason why a juror should not serve. I'm not fully clear on all the rules, but it was very interesting to see how each side handled the selection process. Choosing jurors took most of the day, during which I got to hear the same set of questions 7 or 8 times (this got quite boring). At about 2:45 or 3:00 in the afternoon, both sides were finally pleased with the jury, and all other jurors were excused from duty. There were a total of 12 jurors plus 1 alternate.

Once all the other jurors had been excused and left the courtroom, the case started. Since the state had the burden of proof, the state attorney got to go first in the opening statements. Once she had finished, the defense attorney gave his opening statement. After he was finished, the state began to offer evidence. Two witnesses were called to the stand: a person who had been in the room at the time of the incident (albeit with her back turned), and one of the responding officers. We heard testimony from both of these witnesses. I found it interesting that, because the victim was not present, no hearsay from her could be entered into evidence. This affected the police officer's testimony, since he was reading from a filed incident report. The jury was asked to step out of the courtroom on a number of occasions as the lawyers wrangled with the judge over these sorts of legal matters. At the end of testimony, and after the defense attorney got a chance to cross-examine the witnesses, the state essentially rested its case. The day was spent by this point, so we were asked to return at 9:30 again the next morning to hear evidence from the defense. I should point out that jurors may not discuss the case with anyone (even other jurors) while the case is ongoing. This made all of the jury breaks fairly boring, as we could only chit-chat with one another about random stuff.

Day 3: Friday

At about 9:45 or so on Friday morning, the case got underway again. The defendant took the stand and testified on his own behalf, giving his side of the story. We found out during this testimony that he had previously been charged with two offenses: a drug charge (intent to sell and distribute), and assault on a minor under the age of 12. At least one of these charges was dismissed, presumably the assault charge (he had apparently served some time for the drug charge). The judge later told us that we could only use these previous charges to weigh the truthfulness of the defendant's testimony; we could not use them to make a decision on the current charge against him. In other words, we couldn't find him guilty of assault just because he had a prior assault charge brought against him.

Once the defendant had completed his testimony, we were asked to step out of the courtroom while he stepped down from the witness stand. I also found this interesting. Perhaps the judge deemed it necessary since the witness stand was located on our side of the courtroom. Once we returned, the state recalled the officer to the stand to offer further evidence. This got thrown out, however, on some technical grounds that we weren't privy to (the jury was again asked to leave the courtroom while the lawyers argued their positions). Returning once more, we heard closing arguments from both sides, and we were then given the case. The judge instructed us to evaluate three elements of the charge against the defendant:

- The victim was a female

- The accused was a male

- There was an intent to harm on the part of the accused

Once we were given the case, we went to the jury room to make our decision. It was clear from testimony that an injury had happened (the victim's mouth had been injured to the point of bleeding), but whether or not it was intentional was debatable. The defendant claimed that it had been an accident. According to testimony from all of the witnesses, there had been no arguing beforehand, nor were there any arguments afterward. This, coupled with the fact that no witness really saw what happened, led us toward a "not guilty" verdict, simply because the state had failed to prove that the defendant intended to do harm. I should point out that this defendant had driven the victim quite a long way to Duke Hospital to have her son checked out by doctors, going well out of his way to help her. That he had been kind enough to do that, and that he had asked about the child's well-being during an interview with police, suggested to us that he had no intention of causing harm.

The reading of the verdict was an interesting and tense moment. After the jury was called back in, our foreman handed the verdict to a deputy, who then handed it to the judge. He looked over it, then gave it to the court clerk to read aloud. The defendant was asked to stand, and then the court clerk began to read out her boilerplate statement. She eventually got to the decision itself, the reading of which really reached a crescendo (it read something like "We the twelve members of this jury hereby find the defendant..."). I couldn't imagine being in the defendant's position, waiting for those few words that indicate whether you're free to go, or are on your way to jail. After the verdict was read, the jury was excused and our time was considered completely served. Now that I have served, I cannot be recalled for jury duty for two years.

I'm very glad I got the opportunity to serve on a jury. Although there was a fair share of boring moments, I found the experience quite educational. Plus, I get $52 for my three days served ($12 for the first day, $20 each for days 2 and 3)! This was definitely an experience I'll never forget.

Sound Corruption in Windows 7?

Sep 22, 2010

Has anyone here run into sound corruption problems in Windows 7? I'm having occasional audio problems with my current system, and I'm wondering whether my Sound Blaster Audigy 2 ZS is to blame (it's an ancient card). All I need is another hardware failure...

Disliking Java

Sep 21, 2010

If you were to ask me which programming language I hated, my first answer would most certainly be Lisp (short for "Lots of Stupid, Irritating Parentheses"). On the right day, my second answer might be Java. But seeing as hate is such a strong word, I'll opt for the statement that I dislike Java instead.

For the first time in probably 7 or 8 years, I'm having to write some Java code for a project at work. In all fairness, one of the main reasons I dislike the language is that I'm simply not very familiar with it. I'm sure that if I spent more time writing Java code, I'd warm up to some of its quirks. But there are too many annoyances out of the gate to make me want to write stuff in Java for fun. Jumping back into Java development reminds me just how lucky I am to work with Perl and C++ code on a daily basis. Here are a few of my main gripes:

- It's a little ridiculous that the language requires the name of the file containing a public class to exactly match the name of the class (so, a public class named `MyClass` has to be placed in a file named "MyClass.java"). Other than making it easy to find where certain code resides, what's the benefit of this practice? The compiler simply translates your human-readable code into platform-independent byte code; filenames get lost in the translation!

- It pains me to have to write `System.out.println("Some string");` to print some text, when in Perl it's simply `print "Some string";`. This leads me to my next major gripe:

- Java is way too verbose. I have to write 100 lines of code in Java to do what can be done in 10 lines of Perl. My time is worth something, and I'm spending too much of it dealing with Java boilerplate code. In C++, I can use the `public:` keyword once, and everything that follows is public (until either another access specifier is reached or we come to the end of the class definition). Java has no equivalent; instead, I have to place the `public` keyword in front of each and every member variable and function. Ugh!

- Surprisingly, Java's documentation is pretty poor. Examples are few and far between, and varying terminology makes it unclear when to use which function. For example, in some list-based data structure classes, getting a count of the items in said list might be `getSize()`, it might be `getLength()`, it could be just `length()`, or it might even be `getNumberOfItems()`. There's apparently no standard. Every other language manual I've ever used, be it PHP, Perl, or even the official C++ manual, has examples throughout and relatively sane naming conventions. I can find no such help in Java-land.

- Automatic memory management can be handy, but it can also be a bother. I know for a fact that there are folks out there who make competent Java programmers but wouldn't last 10 minutes with C++ code. Pointers still matter in the world of computing. That Java hides all of those concepts from programmers, especially young programmers learning the trade, seems detrimental to me. It pays to know how memory allocation works. Trusting the computer to "just handle it" for you isn't always the best solution.

- Nearly all Java IDEs make Visual Studio look like the greatest thing on the planet, and Visual Studio sucks!
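To make a few of these gripes concrete, here is a minimal Java sketch. The class and member names are hypothetical, made up for illustration. It shows per-member access modifiers (there's no C++-style `public:` section), the `System.out.println` call, and the three different spellings the core platform itself uses for "how many items": a `length` field on arrays, a `length()` method on `String`, and a `size()` method on collections.

```java
// File: MyClass.java (javac requires a public top-level class to live in a file of the same name)
import java.util.ArrayList;
import java.util.List;

public class MyClass {
    // No "public:" section in Java; every member carries its own modifier.
    public int count;
    public String label;

    public MyClass(String label) {
        this.label = label;
    }

    public void increment() {
        count++;
    }

    // A member with no modifier is package-private, not public.
    int packageVisible;

    public static void main(String[] args) {
        // The Java equivalent of Perl's: print "Some string\n";
        System.out.println("Some string");

        int[] array = {1, 2, 3};
        String text = "hello";
        List<Integer> list = new ArrayList<>();
        list.add(1);
        list.add(2);

        System.out.println(array.length);  // arrays: a field named length
        System.out.println(text.length()); // String: a length() method
        System.out.println(list.size());   // collections: a size() method
    }
}
```

Saved as MyClass.java and run with `javac MyClass.java && java MyClass`, it prints "Some string" followed by 3, 5, and 2. In fairness to Java, the standard collection classes (`List`, `Set`, `Map`) do consistently use `size()`; the mismatch shows up mostly between arrays, strings, and collections.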

All that being said, the language does have a few redeeming features. Packages are a nice way to bundle up chunks of code (I wish C++ had a similar feature). It's also nice that the language recognizes certain data types as top-level objects (strings being one; again, C++ really hurts in this department, and yes I know about STL string which has its own set of problems).

I know there are folks who read this site who make a living writing Java code, so please don't take offense at my views. It's not that I hate Java; it's just that I don't like it.

The Future of Graphics Cards?

Sep 14, 2010

Having recently replaced my graphics card, I was surprised to learn that the latest generation of cards requires not one, but two PCI-E power connections (with a recommended power supply rating of 20A on the +12V rail). Seeing as graphics cards have gotten larger (they now take up the width of 2 or more PCI slots) and more power-hungry, I got to thinking about their future. Several questions came to mind:

- In 5 or 10 years, will graphics cards require their own dedicated power supply?

- Will computer manufacturers forgo the PCI-E format for some sort of on-board socket, similar to the CPU?

- If not, how will card size factor into motherboard and case design?

It seems to me, especially seeing as how some graphics cards have cooling units larger than the card itself, that the PCI-E form factor for GPUs can't last for many more years. Perhaps smaller, multi-core designs will keep them from growing even larger than they are today. It's interesting to think about the various possibilities.
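For a sense of scale, the 20A recommendation for the +12V rail converts to watts with P = V × I. The second line compares that with what a card can draw through its connectors, assuming two 6-pin PCI-E connectors (the post doesn't say which kind this card uses); the PCI Express specifications allow up to 75 W from an x16 slot, 75 W from a 6-pin connector, and 150 W from an 8-pin connector.

$$P = V \times I = 12\,\text{V} \times 20\,\text{A} = 240\,\text{W}$$

$$P_{\text{card, max}} = \underbrace{75\,\text{W}}_{\text{x16 slot}} + 2 \times \underbrace{75\,\text{W}}_{\text{6-pin}} = 225\,\text{W}$$

With two 8-pin connectors instead, the ceiling would be 75 + 2 × 150 = 375 W.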

Not as Bad as I Feared?

Aug 27, 2010

I found an old video card around my house last night, so I swapped my current one out for it. I was able to boot my system, but upon entering Windows, I still see graphical trash. That indicates to me that the motherboard is most likely to blame.

After doing a little bit of hardware research last night, it appears that my CPU is still among the best, so I doubt I'll replace that after all. And seeing as my graphics card might not actually be to blame, I'll probably hang on to it as well (it, too, is still fairly decent). The motherboard definitely needs to be replaced, and I'm thinking about going to DDR3 memory instead of DDR2 (though if I stayed with DDR2 I could get by with just purchasing a new motherboard).

So, long story short, the situation doesn't appear to be as dire as I had initially thought. It still bites that I have to deal with this though. Why can't technology just work?

The Day the Computer Died

Aug 26, 2010

My desktop computer at home has been giving me some occasional graphical problems ever since I updated to Windows 7. I have the latest and greatest drivers for my graphics card, but every so often I get graphical trash on screen that, usually, corrects itself. Tonight, it seems to have died for good. I can't get the system to boot reliably, even after reseating the card. To add to my woes, I've also been having the occasional "double-beep" at startup, indicating that I have a memory problem. This has been an issue ever since I switched to the abit motherboard I'm currently using.

Anyway, I'm going to bite the bullet and buy a bunch of new hardware to fix all of this: new motherboard, CPU, memory, graphics card, the whole shebang.

If you have recommendations as to what to buy these days, I'd sure appreciate it. I'll be putting in some orders ASAP, so the sooner you can recommend something, the better.

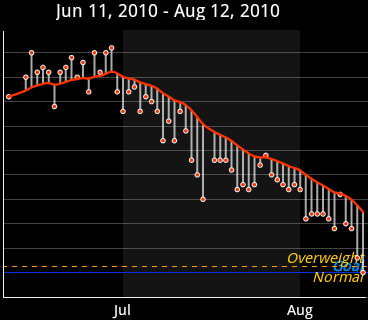

Tracking My Weight

Aug 13, 2010

I've been slightly overweight for quite a long time. Two months ago, I decided I would start tracking my weight daily, in an effort to try and motivate myself to shed a few pounds. Desiring a tool to make this easy, I immediately searched the Android marketplace and found Libra. This incredibly handy tool uses a weight trend line as described in the excellent book The Hacker's Diet.

Allow me to quickly talk about The Hacker's Diet. Written by John Walker, founder of Autodesk, this book tackles weight loss as an engineering problem. The author is funny, to the point, and provides a careful analysis of how weight loss works. The briefest summary: you will only lose weight by eating fewer calories than you need. Exercise won't do it (though it helps), and weird diets (Atkins, South Beach, et al.) won't do it either. Read the book for further discussion and analysis of this viewpoint. The author presents a pretty solid case that's hard to argue against. Best of all, the book is available for free as a PDF!

The trend line in a weight chart tells you where you're headed: am I gaining weight (line going up), maintaining it (horizontal), or losing it (line going down)? With this simple tool, I was able to see in no time at all that my weight was going upwards at an alarming rate. After waking up to my weight gain, I set a modest goal of losing 9 pounds (I was 9 pounds above the "overweight" line for someone my height).
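The Hacker's Diet computes this trend as an exponentially smoothed moving average: each day, the trend moves 10% of the way from its previous value toward that day's scale reading, which damps out day-to-day noise. Here's a minimal Java sketch of that calculation, assuming the book's 10% smoothing factor and seeding the trend with the first weigh-in; I can't say for sure that Libra does exactly this, and the sample weights are made up.

```java
/** Minimal sketch of a Hacker's Diet-style weight trend (exponentially smoothed moving average). */
public class WeightTrend {
    // Assumed smoothing factor: the trend absorbs 10% of each day's deviation.
    static final double SMOOTHING = 0.1;

    /** Returns one trend value per weigh-in; the first trend value is seeded with the first weight. */
    static double[] trend(double[] weights) {
        double[] result = new double[weights.length];
        for (int i = 0; i < weights.length; i++) {
            if (i == 0) {
                result[i] = weights[0];
            } else {
                // Move the trend 10% of the way toward today's reading.
                result[i] = result[i - 1] + SMOOTHING * (weights[i] - result[i - 1]);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical daily weigh-ins, in pounds.
        double[] weights = {180.0, 181.0, 179.5, 180.5, 179.0};
        double[] t = trend(weights);
        for (int i = 0; i < weights.length; i++) {
            System.out.printf("day %d: weight %.1f, trend %.2f%n", i + 1, weights[i], t[i]);
        }
    }
}
```

Because each reading only nudges the trend by a tenth of its deviation, one unusually heavy morning barely moves the line, while a sustained change in eating habits steadily bends it.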

After reading The Hacker's Diet, I made one simple change to my lifestyle: I altered how much I eat at each meal. I didn't change what I eat; only how much. And wow, what a difference that has made! Today, I weighed in at my goal weight for the very first time! Here's the proof:

As you can see from the chart, I started heading up, turned the corner, and have been headed down ever since. My trend line hasn't yet hit my target weight (as of today's measurement, it's projected to hit the target on August 21), but at least it's heading in the right direction. It was a great feeling to hit my target this morning. I'm looking forward to shedding a few more pounds and maintaining a healthier weight.

This weekend, for my mom's birthday, we took a trip over to Greensboro, NC to visit the Greensboro Historical Museum and the Guilford Courthouse National Military Park. Having never visited Greensboro proper, we didn't really know what to expect from either.

The historical museum in Greensboro is way larger than it may look from the outside. We easily spent two hours wandering through the various exhibits, some of which are tremendously large. More time could easily be spent here; the rainy weather limited our outdoor experiences (a few exhibits are outside the building). I was surprised to learn about the history of the area; a number of corporations were founded there, and several prominent events have occurred over the course of time. Best of all, the visit is absolutely free! I came away from the museum very impressed. It easily rivals the state museums in Raleigh.

Guilford Courthouse, site of a pivotal battle in the Revolutionary War, is equally entertaining. Again, the rainy weather limited our outdoor activity at the park, but it should be noted that there are miles of hiking trails and a number of memorials around the park. The visitor center has an excellent 30-minute film describing the events of the battle. A number of artifacts from the battlefield are also on display; from rifles, to cannonballs, to belt buckles, it's all here. The collection is truly gigantic. Again, the visit is completely free. This is a park I will definitely return to.

If you're ever in the Greensboro area, I highly recommend both destinations. Both provide a relaxing environment, and a historical perspective on the Piedmont region of North Carolina.

Useful Tool: ImgBurn

Jul 20, 2010

I needed a quick and easy way to burn an ISO image here at work, so I took a look around and found ImgBurn. A Windows-only app, it's small, easy to set up, and took no time at all to get working. The only annoyance was that the installer included an option to install an "Ask" toolbar in IE (along with a few other advertising options). Thankfully, you can disable them all at setup time.

Recommended LCDs?

Jul 12, 2010

Exactly five years ago today, I bought a used NEC 22" monitor for my personal computer at home. It has served me well in that time, but I've seen it act up a time or two recently. Seeing as LCD technology has come a long way over the past five years, I feel like it's finally time to bite the bullet and join the mainstream. As such, I'm starting the hunt for a new display. Here's what I want:

- Real Estate: I run 1600 x 1200 at home, and I'd like to stay in that neighborhood

- Fast Response Times: The display would primarily be used for gaming, so fast response times are a requirement.

- Vibrant Colors: Some LCD displays have pretty weak white-balance; I want something with nice color reproduction, since I'll also be doing occasional photo editing.

Does anyone here have any recommendations on brands or where to start looking? Is there a model or manufacturer you've been happy with? Any ideas would be appreciated!

Early Thoughts on Windows 7

Jun 21, 2010

On Friday afternoon, I finally upgraded my home system to Windows 7. Windows XP was feeling dated, and my old system had slowed to a crawl for unexplained reasons. I also figured it was time to upgrade to a 64-bit OS, so that's the version of 7 that I installed. Here are a few brief thoughts I've had on this new operating system:

- New Task Bar

- Interestingly enough, the steepest learning curve I've had with Windows 7 has been with the new task bar. I'm quite used to XP's task bar, complete with the quick launch toolbar. The new task bar in Windows 7 rolls these two toolbars into one; essentially combining currently running applications with 'pinned' applications. Also, by default, only program icons are displayed; none of the window titles are shown as a part of each process' button. This new scheme is a little confusing at first, but I'm becoming accustomed to it.

- Updated Start Menu

- Microsoft finally got smart with the new start menu. No longer does it stretch to the top of the screen when you have a million applications installed. Instead, the "All Programs" menu simply transforms into a scrollable pane, showing the items available. This is a terrific UI change that should have been done at least 10 years ago.

- Improved Speed

- In the midst of going to Windows 7, I also made several hardware improvements. I upped my memory from 2 GB to 4 GB (I may go to 8 GB if 4 doesn't suffice), I am using a new brand of hard drive (Western Digital, instead of Seagate), and I added a new CPU heat sink. Since I updated a few hardware components, I'm not sure what really made the difference, but most of my applications now start noticeably faster than before. For example, iTunes starts nearly instantly, which blows the previous 15- to 20-second startup time out of the water. Games also start way faster, which is a plus. I love getting performance boosts like this; hopefully they will hold up over time.

- Miscellaneous

- There are other minor things that I find interesting about the Windows 7 experience:

- Installation was amazingly fast, and I was only asked one or two questions.

- Drivers thankfully haven't been an issue (so far).

- The built-in zip file support has apparently been vastly improved; it's orders of magnitude faster than XP. I'm not sure I'm going to install WinZip seeing as the built-in support is so good.

- The new virtualized volume control is epic; why wasn't it like this all along?

So far, I'm pleasantly surprised with Windows 7. Some of the new UI takes getting used to, but this looks like a positive step forward, both for Microsoft and for my home setup.

E3 2010

Jun 18, 2010

This year's E3 has come and gone, and I thought I'd post a few thoughts on various things introduced at the event. To make things easy, I'll organize them by platform.

PC Gaming

- Portal 2

- This may be the game I'm most excited about. Whereas the first Portal was an "experiment" of sorts, this second title looks to be a full-fledged game. The puzzles sound much more insidious (physics paint!), and the new milieu of the game looks incredible. Portions of the trailer I watched are very funny, as can be expected. And hey, it's Valve we're talking about here. This will definitely be a winner.

- Rage

- id Software's new intellectual property looks incredible. Part racer, part first-person shooter, this game looks like a boatload of fun. It's pretty, too, as expected with titles from id (humans still look a little too fake, however; they need to drop the 'bloated' look). I'll probably pick this one up when it's released.

- Deus Ex: Human Revolution

- If this game is as fun (and as deep) as the first one was, I'll definitely buy in. If it's as lame as the second one was reported to be, I'll skip it. Nevertheless, the trailer looks great.

Nintendo Wii

- Lost in Shadow

- This upcoming adventure game looks really impressive. You play as the shadow of a young boy, separated from him at the beginning of the game. The ultimate goal is to reach the top of a tower, where the boy is being held. But the twist here is that, as a shadow, you can only use other objects' shadows as platforms. Manipulating light in the environment looks like a large part of the puzzle mechanic. This is another very inventive title that looks promising.

- Zelda: Skyward Sword

- What's not to like about a new Zelda title?

- Kirby's Epic Yarn

- Kirby's Epic Yarn has an incredibly unique art design. This time around, Kirby is an outline of yarn, and moves through a similarly designed environment. I've seen plenty of comments around the web poking fun at the seemingly "gay" presentation of the trailer; but this looks like an inventive, fun game to me.

- Donkey Kong Country Returns

- I was a big fan of the Donkey Kong Country games back on the SNES, so I'm really looking forward to this one. Some of the older games were ridiculously difficult; hopefully some of that difficulty will be ported over. The graphics in this one look fantastic.

- Epic Mickey

- Mickey Mouse goes on an epic adventure, using various paints and paint thinners to modify and navigate the world. The fact that this game includes a Steamboat Willie level, complete with the old artwork style, is epic in itself.

Nintendo DS

- Nintendo 3DS

- The next iteration of Nintendo's handheld looks interesting. I'd have to see the 3D effect in person to get a good feel for it, but all the press I've read has sounded promising. There are some neat sounding titles coming for this new platform and, if they're fun enough, I may just have to upgrade.

Xbox 360

- Kinect (AKA Project Natal)

- I'm not exactly sure what to think about this. I've read in several places that Microsoft really butchered the unveiling of this tech, opting for 'family-friendly' titles similar to what's already on the Wii. That being said, Child of Eden looks like a phenomenal title that makes terrific use of the new technology. Only time will tell how this stuff works out. I think it's funny, however, that Sony and Microsoft are just now trying to catch up to Nintendo in motion control. Nintendo gets a lot of hate from the hard-core gaming community (a small portion of which is justified), but they're obviously doing something right; otherwise these companies wouldn't be entering this space.

I'm sure there are a few items I've missed in this rundown, but these are the ones that really caught my eye. For those of you who followed this year's event, what are you looking forward to?

Two Towers and Return of the King

Jun 7, 2010

Yesterday, I finally finished reading The Lord of the Rings for the first time. I can now scratch these books off my list of shame! As I did for the previous two books, I thought I would provide some brief thoughts on each.

The Two Towers

I found it interesting how this volume told two stories in separate chunks (books 3 and 4), rather than interleaving them. The first book follows the adventures of Aragorn, Gimli, Legolas, Merry, Pippin, and Gandalf, from beginning to end. The second follows Sam, Frodo, and Gollum. In the movie adaptation of this book, the stories are intertwined, helping to remind the viewer that various events are happening in parallel. Telling each story in its entirety in the novel was much more rewarding from a reading perspective. I never lost track of what was going on during each story, and I found them that much more engaging. It's interesting that Peter Jackson decided to move the scene with Shelob into the third movie, since it really happens at the end of the second novel. Again, this was a top-notch novel, which I enjoyed cover to cover.

The Return of the King

To me, this book differs more from its movie adaptation than the previous two. In the book, the army of the dead is used to gain ships for Aragorn and company: nothing more. They are released from service after helping the company obtain these ships. In the movie, the dead travel with them and fight Sauron's army with the company. I think I prefer the novel's version here. Likewise, I prefer the ending of the novel over the movie. How could the film's writers have left out the scouring of the Shire? When Frodo and company return to the Shire, they find it in ruin. This was a key scene omitted from the movie, much to the movie's detriment, in my opinion. Novel for the win!

Now for a few final thoughts on the series as a whole:

- It boggles my mind that Arwen is a bit character in the novels. Having seen the movies before reading the books, I guess my sense of her importance was skewed. She barely has any speaking lines in the books, and is left out of The Two Towers altogether.

- While I enjoy Peter Jackson's movie adaptations of these books, the novels (as usual) far exceed them. Key elements were left out of the films: interacting with Tom Bombadil, several scenes with the Ents, and the scouring of the Shire (along with the deaths of both Saruman and Wormtongue). I guess it's hard to beat a book.

Defining State Parks

Jun 4, 2010

While researching the North Carolina State Park System for my "visit and photograph every state park" project, I learned that the system includes far more sites than I realized. My original list had 39 parks; the official list, as I eventually found on the NC parks website, includes 32 parks, 19 natural areas, and 4 recreation areas. Unfortunately, this list is only current as of January 1, 2007. As such, a few newer parks aren't listed, such as Grandfather Mountain and Chimney Rock (which is actually listed as Hickory Nut Gorge).

All of this got me thinking about what, for my purposes, constitutes a "state park." Not all of the official sites have public facilities or access. A number of the state natural areas are simply chunks of land set aside for preservation. Several areas are relatively new and haven't been developed yet. Others remain undeveloped because of recent budget cuts and shortfalls.

These facts have all led me to the following decision: the "state parks" I will pursue in my visitation project will include those for which official attendance figures are kept. Attendance information is posted in each state park newsletter; it is from this source that I have pulled my park list. The result is 40 parks, which nearly agrees with my first list. I had omitted Grandfather Mountain in my first pass, simply because it only recently became a state park, and wasn't listed on the official website until very recently.

I'm looking forward to visiting each park in the state. As of this writing, I've been to 13 parks, and have photographed 11. Plenty more to go!

Useful Tool: Process Explorer

May 13, 2010

I cannot recommend Process Explorer highly enough. This application from Sysinternals is essentially a replacement for the built-in Windows Task Manager. One small feature that turns out to be pretty useful is that each process is shown in the list with its associated icon. This makes tracking down a specific application really easy, especially those troublesome processes that don't terminate cleanly (Java, I'm looking at you). The other tremendously useful feature is the description and company name shown with each process. Many processes have cryptic, 8-character names, and having that extra information to help identify them is a real time saver.

Automatic Dependency Generation

May 7, 2010

As I mentioned a while back, I've been wanting to discuss automatic dependency generation using GNU make and GNU gcc. This is something I just recently figured out, thanks to two helpful articles on the web. The following is a discussion of how it works. I'll be going through this material quickly, and I'll be doing as little hand-holding as possible, so hang on tight.

Let's start by looking at the final makefile:

```make
SHELL = /bin/bash

ifndef BC
BC=debug
endif

CC = g++
CFLAGS = -Wall
DEFINES = -DMY_SYMBOL
INCPATH = -I../some/path

ifeq ($(BC),debug)
CFLAGS += -g3
else
CFLAGS += -O2
endif

DEPDIR=$(BC)/deps
OBJDIR=$(BC)/objs

# Build a list of the object files to create, based on the .cpps we find
OTMP = $(patsubst %.cpp,%.o,$(wildcard *.cpp))

# Build the final list of objects
OBJS = $(patsubst %,$(OBJDIR)/%,$(OTMP))

# Build a list of dependency files
DEPS = $(patsubst %.o,$(DEPDIR)/%.d,$(OTMP))

all: init $(OBJS)
	$(CC) -o My_Executable $(OBJS)

init:
	mkdir -p $(DEPDIR)
	mkdir -p $(OBJDIR)

# Pull in dependency info for our objects
-include $(DEPS)

# Compile and generate dependency info
# 1. Compile the .cpp file
# 2. Generate dependency information, explicitly specifying the target name
# 3. The final three commands do a little bit of sed magic. The following
#    sub-items all correspond to the single sed/fmt pipeline below:
#    a. sed: Strip the target (everything before the colon)
#    b. sed: Remove any continuation backslashes
#    c. fmt -1: List words one per line
#    d. sed: Strip leading spaces
#    e. sed: Add trailing colons
$(OBJDIR)/%.o : %.cpp
	$(CC) $(DEFINES) $(CFLAGS) $(INCPATH) -o $@ -c $<
	$(CC) -MM -MT $(OBJDIR)/$*.o $(DEFINES) $(CFLAGS) $(INCPATH) \
		$*.cpp > $(DEPDIR)/$*.d
	@cp -f $(DEPDIR)/$*.d $(DEPDIR)/$*.d.tmp
	@sed -e 's/.*://' -e 's/\\$$//' < $(DEPDIR)/$*.d.tmp | fmt -1 | \
		sed -e 's/^ *//' -e 's/$$/:/' >> $(DEPDIR)/$*.d
	@rm -f $(DEPDIR)/$*.d.tmp

clean:
	rm -fr debug/*
	rm -fr release/*
```

Let's blast through the first 20 lines of code real quick, seeing as this is all boring stuff. We first set our working shell to bash, which happens to be the shell I prefer (if you don't specify this, the shell defaults to 'sh'). Next, if the user didn't specify the BC environment variable (short for "Build Configuration"), we default it to a value of 'debug.' This is how I gate my build types in the real world; I pass it in as an environment variable. There are probably nicer ways of doing this, but I like the flexibility that an environment variable gives me. Next, we set up a bunch of common build variables (CC, CFLAGS, etc.), and we do some build configuration specific setup. Finally, we set our DEPDIR (dependency directory) and OBJDIR (object directory) variables. These will allow us to store our dependency and object files in separate locations, leaving our source directory nice and clean.
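To make the configuration logic concrete, here is a rough bash re-expression of what the `ifndef BC` and `ifeq` blocks compute. This is only an illustration and not how make actually evaluates the file. The helper name `build_settings` is hypothetical. As in the makefile, an empty BC is treated like an unset one, because `ifndef` only considers a variable defined if it has a non-empty value.

```bash
#!/usr/bin/env bash
# Illustration only: mirrors the makefile's ifndef/ifeq logic in bash.
# build_settings is a hypothetical helper, not part of the makefile.

build_settings() {
  # ifndef BC / BC=debug: an unset or empty BC falls back to "debug"
  local bc="${BC:-debug}"
  local cflags="-Wall"

  # ifeq ($(BC),debug): debug symbols for debug builds, -O2 otherwise
  if [ "$bc" = "debug" ]; then
    cflags+=" -g3"
  else
    cflags+=" -O2"
  fi

  # Each configuration gets its own dependency and object directories
  echo "CFLAGS=$cflags DEPDIR=$bc/deps OBJDIR=$bc/objs"
}

unset BC
build_settings              # debug settings
BC=release build_settings   # release settings
```

With the real makefile, the corresponding invocations would be `make` and `BC=release make`. A command-line assignment such as `make BC=release` also works, since it defines BC before the `ifndef` is evaluated.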

Now we come to some code that I discussed in my last programming grab bag:

```make
# Build a list of the object files to create, based on the .cpps we find
OTMP = $(patsubst %.cpp,%.o,$(wildcard *.cpp))

# Build the final list of objects
OBJS = $(patsubst %,$(OBJDIR)/%,$(OTMP))

# Build a list of dependency files
DEPS = $(patsubst %.o,$(DEPDIR)/%.d,$(OTMP))
```

The OTMP variable is assigned a list of file names ending with the .o extension, all based on the .cpp files we found in the current directory. So, if our directory contained three files (a.cpp, b.cpp, c.cpp), the value of OTMP would end up being: a.o b.o c.o.

The OBJS variable modifies this list of object files by prepending the OBJDIR value to each entry, which gives us our "final list" of object files. The DEPS variable does the same with DEPDIR, but it also swaps each .o extension for .d, which gives us our final list of dependency files. In a debug build, for example, a.o becomes debug/objs/a.o in OBJS and debug/deps/a.d in DEPS.
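To see all three lists side by side, the following bash sketch performs the same name mapping as the three patsubst calls. It uses parameter expansion instead of make functions. It assumes the default debug directories (debug/objs and debug/deps), and the function name `show_lists` is hypothetical.

```bash
#!/usr/bin/env bash
# Illustration only: the OTMP/OBJS/DEPS mapping from the makefile,
# redone with bash parameter expansion. show_lists is hypothetical.

show_lists() {
  local objdir="${OBJDIR:-debug/objs}" depdir="${DEPDIR:-debug/deps}"
  local otmp=() objs=() deps=() src o

  # OTMP: foo.cpp -> foo.o, like $(patsubst %.cpp,%.o,...)
  for src in "$@"; do
    [[ $src == *.cpp ]] && otmp+=("${src%.cpp}.o")
  done

  # OBJS: prefix OBJDIR; DEPS: swap .o for .d and prefix DEPDIR
  for o in "${otmp[@]}"; do
    objs+=("$objdir/$o")
    deps+=("$depdir/${o%.o}.d")
  done

  echo "OTMP = ${otmp[*]}"
  echo "OBJS = ${objs[*]}"
  echo "DEPS = ${deps[*]}"
}

# In the makefile, this file list comes from $(wildcard *.cpp)
show_lists a.cpp b.cpp c.cpp
```

For the three example files, this prints `OTMP = a.o b.o c.o`, then the same names under debug/objs/, and finally under debug/deps/ with a .d extension.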

Next up is our first target, the all target. It depends on the init target (which is responsible for making sure that the DEPDIR and OBJDIR directories exist), as well as our list of object files that we created moments ago. The command in this target will link together the objects to form an executable, after all the objects have been built. The next line is very important:

```make
# Pull in dependency info for our objects
-include $(DEPS)
```

This line tells make to include all of our dependency files. The minus sign at the front says, "if one of these files doesn't exist, don't complain about it." After all, if the dependency file doesn't exist, neither does the object file, so we'll be recreating both anyway. Let's take a quick look at one of the dependency files to see what they look like, and to understand the help they'll provide us:

```make
debug/objs/myfile.o: myfile.cpp myfile.h
myfile.cpp:
myfile.h:
```

In this example, our object file depends on two files: myfile.cpp and myfile.h. Note that, after the dependency list, each file is listed by itself as a rule with no dependencies. We do this to exploit a subtle feature of make:

If a rule has no prerequisites or commands, and the target of the rule is a nonexistent file, then make imagines this target to have been updated whenever its rule is run. This implies that all targets depending on this one will always have their commands run.

This feature helps us avoid the dreaded "no rule to make target" error. That error otherwise appears when a header listed in an existing dependency file is renamed or deleted during development. You no longer have to run make clean to pick up those kinds of changes; the dependency files let make do that work for you!

Back in our makefile, the next giant block is where all the magic happens:

```make
# Compile and generate dependency info
# 1. Compile the .cpp file
# 2. Generate dependency information, explicitly specifying the target name
# 3. The final three commands do a little bit of sed magic. The following
#    sub-items all correspond to the single sed/fmt pipeline below:
#    a. sed: Strip the target (everything before the colon)
#    b. sed: Remove any continuation backslashes
#    c. fmt -1: List words one per line
#    d. sed: Strip leading spaces
#    e. sed: Add trailing colons
$(OBJDIR)/%.o : %.cpp
	$(CC) $(DEFINES) $(CFLAGS) $(INCPATH) -o $@ -c $<
	$(CC) -MM -MT $(OBJDIR)/$*.o $(DEFINES) $(CFLAGS) $(INCPATH) \
		$*.cpp > $(DEPDIR)/$*.d
	@cp -f $(DEPDIR)/$*.d $(DEPDIR)/$*.d.tmp
	@sed -e 's/.*://' -e 's/\\$$//' < $(DEPDIR)/$*.d.tmp | fmt -1 | \
		sed -e 's/^ *//' -e 's/$$/:/' >> $(DEPDIR)/$*.d
	@rm -f $(DEPDIR)/$*.d.tmp
```

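This block of code is commented, but I'll quickly rehash what's going on. The first command compiles the object file. The second command runs the compiler with -MM, which writes a make rule listing the source file and every non-system header it includes. It also passes -MT, which sets that rule's target to the object file's path under OBJDIR; without it, the target would be a bare myfile.o. We then use some sed magic to append the special empty rules to each dependency file.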
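If you want to watch the sed magic work without a compiler, the bash sketch below runs the same sed/fmt pipeline on a hand-written sample of `g++ -MM -MT` output. The helper name `make_dep_file` and the header `util/helpers.h` are hypothetical. Inside the makefile, `$$` is make's escape for a literal `$`, so in a plain shell script it is written as `$`. The sketch assumes GNU sed and GNU coreutils `fmt`.

```bash
#!/usr/bin/env bash
# Illustration only: runs the makefile's sed/fmt pipeline on a sample of
# `g++ -MM -MT` output, so no compiler is needed.
# make_dep_file and util/helpers.h are hypothetical.

make_dep_file() {
  local raw="$1"
  # Step 2 output: the dependency rule exactly as the compiler wrote it
  printf '%s\n' "$raw"
  # Step 3: append one empty rule per prerequisite
  printf '%s\n' "$raw" |
    sed -e 's/.*://' -e 's/\\$//' |  # a, b: drop target and trailing backslashes
    fmt -1 |                         # c: one word per line
    sed -e 's/^ *//' -e 's/$/:/'     # d, e: strip leading spaces, add colons
}

# A multi-line rule, continued with a backslash as the compiler does
sample='debug/objs/myfile.o: myfile.cpp myfile.h \
 util/helpers.h'

make_dep_file "$sample"
```

Running it prints the compiler's rule, followed by `myfile.cpp:`, `myfile.h:` and `util/helpers.h:` on lines of their own. That is the same shape as the dependency file example earlier in this post.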

Though it's a lot to take in, these makefile tricks are handy to have in your toolbox. Letting make handle the dependency generation for you will save you a ton of time in the long run. It also helps when you're working with very large projects, as I do at work.

If you have a question about this article, feel free to leave a comment.

Oatmeal Raisin Cookies

Apr 29, 2010

This recipe comes from Quaker Oats (from the lid on their oatmeal containers, specifically). I've transcribed it here so I can remember it without having to keep an oatmeal lid lying around somewhere. Note that the cookie recipe on their website is slightly different from this one. These are incredibly delicious cookies!

- 1/2 pound of margarine or butter, softened

- 1 cup firmly packed brown sugar

- 1/2 cup granulated sugar

- 2 eggs

- 1 teaspoon vanilla

- 1-1/2 cups all-purpose flour

- 1 teaspoon baking soda

- 1 teaspoon cinnamon

- 1/2 teaspoon salt (optional)

- 3 cups oats, uncooked

- 1 cup raisins

Beat together the margarine and sugars until creamy. Add eggs and vanilla, and beat well. Add combined flour, baking soda, cinnamon and salt; mix well. Stir in oats and raisins; mix well. Drop by rounded teaspoonfuls onto an ungreased cookie sheet. Bake at 350 degrees for 10 to 12 minutes or until golden brown. Cool 1 minute on cookie sheet; remove to wire rack. Makes about 4 dozen.

Hover Inspection in Firebug

Apr 16, 2010

One of the recent updates to Firebug broke a "feature" I used all the time: the ability to select a link with the element inspector, then edit that link's :hover pseudo-class style rules. Well, it turns out that, technically, that "feature" was a bug (though I might argue otherwise). In newer versions of Firebug, you have to:

- Click the element inspector

- Click the link you're interested in editing

- Select the :hover pseudo-class menu item in the Style tab's drop-down menu

- Edit the rule as you like

This new option allows you to "lock" the element in the :hover state, the usefulness of which I can understand. At the same time, it would be great to have an option (perhaps a hidden preference) to bring back the old behavior.

Plugging CSS History Leaks in Firefox

Apr 14, 2010

Yesterday's nightly Firefox build fixed bug 147777, in which the :visited CSS pseudo-class allowed web sites to detect which sites you have visited (essentially a privacy hole). Sid Stamm wrote a very interesting article on the problem, along with how the Firefox team decided to fix the issue. I recall being amazed at the simplicity of this privacy attack: no JavaScript was needed for a site to figure out where you had been. Several history-sniffing websites are available if you're interested in seeing the hole in action.