The latest Smarter Every Day video is on tractor pulls and the science behind them. I grew up watching these on TV (alongside monster truck rallies), and I was aware of the basics. It turns out, however, that there's a lot more going on here than meets the eye. This was a really entertaining and enlightening watch; check it out!

Tractor Pulls

Aug 28, 2022

Sudoku Solver

Aug 4, 2022

I've really gotten into sudoku recently. I'm not sure what prompted this, but I've been playing through the New York Times' puzzles, which offer an easy, medium, and hard variation daily. My favorite feature of sudoku is that you don't have to guess randomly to make progress. As a logic puzzle, all of the information you need to solve it is there on the board in front of you. This makes parsing through that logic a fun challenge.

With practice, I've improved my chances of solving these puzzles. I can now solve both the easy and medium puzzles without any assistance or hints. The hard puzzles, however, have been a higher hurdle to clear. I get stuck on the hard puzzles pretty often, getting to a point where I run out of strategies to employ.

While reading up on various advanced strategies, I happened upon the Sudoku Solver by Andrew Stuart. This web application allows you to set up the game board, along with what you know so far, and then have it walk through the solution step by step. It's this latter feature that is so amazing to me. You can watch, step by step, which strategies get employed to break through whatever wall you're currently facing. I've used it a few times now to help me learn new strategies (naked pairs and hidden pairs being the newest ones I've learned). I'm still no expert, but this helpful little tool is helping me learn the ins and outs of how these games are typically solved.
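For anyone curious what a strategy like naked pairs looks like in code, here's a minimal Python sketch. It is not how Andrew Stuart's solver is implemented; the function name `eliminate_naked_pairs` and the idea of representing a unit (a row, column, or box) as a list of candidate sets are my own assumptions for illustration. A naked pair is two cells in the same unit that share the same two candidates, which means those two digits can be removed from every other cell in that unit.

```python
from itertools import combinations


def eliminate_naked_pairs(unit):
    """Apply the naked-pairs rule to one unit (row, column, or box).

    `unit` is a list of sets, one per cell, holding that cell's remaining
    candidate digits. Returns a new list; the input is left untouched.
    """
    result = [set(cell) for cell in unit]
    for i, j in combinations(range(len(result)), 2):
        pair = result[i]
        # A naked pair: two cells with the same two candidates.
        if len(pair) == 2 and pair == result[j]:
            for k, cell in enumerate(result):
                if k not in (i, j):
                    cell -= pair  # those digits must live in cells i and j
    return result


# Hypothetical partial row: the first two cells can only be 4 or 7,
# so the third and fourth cells lose 4 and 7 as candidates.
row = [{4, 7}, {4, 7}, {1, 4, 9}, {2, 7, 9}, {3}]
print(eliminate_naked_pairs(row))
```

Hidden pairs work the other way around: two digits that can only go in the same two cells of a unit, which lets you strip every other candidate from those two cells.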

On Human Curation

Jul 27, 2022

A recent article at The Verge entitled "Adam Mosseri confirms it: Instagram is over" got me thinking about content curation. One of the article's arguments revolves around how "the algorithm" is partially to blame for Instagram's slow demise. I'm not an Instagram user, but I do use YouTube, which has similar problems. The home page of YouTube is skewed by what "the algorithm" thinks I want to see. Most of the time, it's surprisingly bad at predicting what I might be interested in. One of my major gripes is that it often suggests things I've already watched.

What I'd love is for more platforms to offer human curation, something along the lines of kottke.org (to which I was a latecomer; kottke.org is currently on hiatus). I claim that human-curated content, done correctly, would outperform any algorithmic means currently employed.

Neat Video on Electric Train Power Lines

Jul 3, 2022

YouTube recommended the following video to me tonight. It answers a number of questions I've always had about how the power lines for electric trains are structured:

- How is the contact wire kept straight?

- How does it deal with temperature variations?

- Why do the electric lines have so many components?

The animations in this video make it all clear. It's well worth the short watch if you're curious about this stuff like I am.

Live Flight Radar

Jun 26, 2022

Recently, while sitting out on my back deck with the kids, I wondered if it was possible to identify the planes flying over my house. (We live near Raleigh-Durham International Airport, which means there are always planes visible.) I asked Brave if this was possible, and found out that it was!

The Flightradar24 website allows you to view flight paths of planes in real time, which is so neat. They have an associated app, which I downloaded to my phone. I can now see a plane, pull up the app, and identify the flight (where it's coming from, where it's going to, etc.). The app shows big commercial flights, as well as smaller private flights. Helicopters are also displayed. What a neat world!

Home Renovation Series

Jun 12, 2022

Matt Cremona, one of YouTube's best woodworkers, is having his house renovated. He's filming the entire process, and is up to 37 episodes as of this writing (check out the full playlist). He claims that there will be well over 100 episodes in total!

I cannot recommend this series highly enough; it's what I wish shows like This Old House were like. He covers the details of every stage, showing how they tackle the problems they encounter (some of which are doozies!). I'll link the first video in the series below. This series is a slow burn, but it's well worth the watch.

Words of the 19th Century

May 24, 2022

I've recently been rereading the collection of Sherlock Holmes stories by Arthur Conan Doyle. These stories are among my favorites, though it's been quite some time since I read them last. One of the fun things about reading these classic stories has been discovering words that I am unfamiliar with. As I have read, I've been keeping a log of these puzzling words, though I only started logging about one-third of the way through the first volume. There are likely several words I've left out as a result.

Here are the esoteric words I've come across so far. See how many you know:

- vesta (noun): a short match with a shank of wax-coated threads

- distrait (adjective): apprehensively divided or withdrawn in attention; distracted

- wideawake (noun): a soft felt hat with a low crown and a wide brim

- presentiment (noun): a feeling that something will or is about to happen

- St. Vitus' dance (noun): a movement disorder marked by involuntary spasmodic movements especially of the limbs and facial muscles and typically symptomatic of neurological dysfunction

- meretricious (adjective): tawdrily and falsely attractive; also, superficially significant

- atavism (noun): recurrence in an organism of a trait or character typical of an ancestral form and usually due to genetic recombination

- chevy (verb): to chase; run after

- betokened (verb): to give evidence of

- asperity (noun): roughness of manner or of temper : harshness of behavior or speech that expresses bitterness or anger

- inanition (noun): the exhausted condition that results from lack of food and water

As I go through the second volume (which I have yet to start), I'll keep a similar log and may make a second post with additional words from the past.

Weather Forecasting is Weird

May 23, 2022

Here's an enjoyable video from the always terrific Atomic Frontier YouTube channel on the history of weather forecasting, and how we do it today. I learned some stuff I didn't know!

Brave Search

May 18, 2022

For the past few weeks, I've been giving Brave Search a good college try. I've been doing this in an effort to reduce my dependency on Google, as well as to reduce my exposure to their advertising and profiling mechanisms. So far, I've been pleasantly surprised. The majority of my searches are of a technical nature (usually related to my work), and the search results generally have exactly what I'm looking for. Their recently added Discussions feature has been particularly helpful for finding results from sites like Stack Overflow and Reddit.

It isn't perfect, however; a few recent searches on some esoteric technical topics (7-zip performance in Ubuntu, for example) left me mildly disappointed. However, I've been pleased enough that I think I'll start using this as my go-to search engine. It works great in the Brave browser, which I am also now using as my primary driver.

Python's namedtuple is Great

May 10, 2022

I don't use the namedtuple often in Python, but every time I do, I ask myself, "Why aren't I using this more often?" Today I ran into a case where it made total sense to use it.

I'm loading data from a database into a dictionary, so that I can later use this data to seed additional tables. To keep things nice and flat, I use a tuple as the key into the dictionary:

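    from collections import namedtuple

    # One key per (org, role, location, offset) combination
    ModelKey = namedtuple('ModelKey', 'org role location offset')

    model_data = {}
    for x in models.DataModelEntry.objects.filter(data_model=themodel):
        key = ModelKey(x.org, x.role, x.location, x.offset)
        # setdefault keeps the first value seen for a given key
        model_data.setdefault(key, x.value)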

Later, when I use this data, I can use the field names directly, without having to remember in which slot I stored what parameter:

    to_create = []
    for key, value in model_data.items():
        obj = models.Resource(org=key.org, role=key.role, location=key.location,
                              offset=key.offset, value=value)
        to_create.append(obj)

The first line in the loop is so much clearer than the following:

    obj = models.Resource(org=key[0], role=key[1], location=key[2], offset=key[3], value=value)

Using the field names also makes debugging easier for future you!
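For anyone who wants to try the pattern without a Django project, here's a minimal, self-contained sketch. The rows and their values are made up for illustration; only the `ModelKey` definition comes from the code above.

```python
from collections import namedtuple

ModelKey = namedtuple('ModelKey', 'org role location offset')

# Made-up rows standing in for the database query:
# (org, role, location, offset, value)
rows = [
    ('sales', 'manager', 'raleigh', 0, 10),
    ('sales', 'manager', 'raleigh', 1, 12),
    ('sales', 'manager', 'raleigh', 0, 99),  # duplicate key; setdefault keeps the first value
]

model_data = {}
for org, role, location, offset, value in rows:
    model_data.setdefault(ModelKey(org, role, location, offset), value)

for key, value in model_data.items():
    # Fields are available by name instead of by slot number.
    print(key.org, key.location, key.offset, value)

# A namedtuple compares and hashes like the equivalent plain tuple,
# so either form works for lookups.
assert model_data[('sales', 'manager', 'raleigh', 0)] == 10
```

Because the key is still an ordinary tuple underneath, nothing about the dictionary changes; the names are purely a readability win.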

Trimming Doors

Apr 24, 2022

Earlier this week we had new carpets installed in our house. The new carpet is much thicker than the old one, and is remarkably soft underfoot. Unfortunately, this new thickness resulted in a number of doors dragging. Not only did this make opening and closing some of the doors difficult, but it wasn't doing any favors for our heating and air conditioning units. It turns out that door gaps are fairly important from an HVAC perspective.

I decided to try my hand at trimming these doors myself, given that I have the tools. It turned out that this process was fairly simple, and I got great results. Here are some photos showing how I went about doing this:

There were a few things I learned through the process that I wish I had known before I started:

- The strip of melamine I used was too narrow. A few times, my clamps got in the way and resulted in a bulge or two that I had to sand out.

- Using a strip of painter's tape along the straightedge is key. I made the mistake of omitting this on the first door I did, and the saw's foot marred the door.

- I tried using a gap of only 3/8 of an inch on one door, but that ended up being too small. A 1/2 inch gap is much better.

This was a fairly easy project to do, though it was somewhat time consuming. It's nice, however, to have it completed. And I did it myself!

NC Zoo's Aviary to Close

Apr 22, 2022

What devastating news! The North Carolina Zoo will permanently shutter its aviary, by far the best attraction! I've taken many photos over the years in the aviary, as it was one of the most photogenic places at the zoo (and, one could argue, in all of North Carolina). I get that the building is likely in bad shape, but can't we set up a GoFundMe or something to build a replacement? What a terrible loss.

Good Old Music - Vol. 4

Apr 13, 2022

I've only recently discovered the music of Rush, a band I had previously overlooked. Geddy Lee's voice takes some getting used to, but the musicianship of this band is astounding. I'm really enjoying hearing their albums for the first time.

Hemispheres is my favorite album of theirs that I've heard so far. The highlight track is La Villa Strangiato, an instrumental piece that clocks in at over nine and a half minutes.

The entire album can be heard on YouTube, and is included below. Check it out!

Minor Site Tweaks

Mar 30, 2022

This shouldn't affect too many visitors to this site, but I've tweaked the stylesheets (and markup) slightly to improve the reading experience on mobile devices. If you spot anything that's obviously broken, let me know.

Django REST Framework is Too Abstract

Mar 29, 2022

Django REST Framework (DRF) is, on the surface, a neat piece of software. It provides web interactivity (for free!) to your REST interfaces, and can make generating those interfaces pretty quick to do. It has baked-in authentication and permission handling. With just a few lines of code you're up and running. Or are you?

As you dig deeper into their tutorial, you'll find that this framework is abstraction layer on top of abstraction layer. Using naked Django style views, I could easily write a listing routine for a specific model in my application. Let's take an example model (all code in this post will omit imports, for brevity):

    class Person(models.Model):
        email = models.CharField(max_length=60, unique=True)
        first_name = models.CharField(max_length=60)
        last_name = models.CharField(max_length=60)
        display_name = models.CharField(blank=True, max_length=120)
        manager = models.ForeignKey('self', related_name='direct_reports', on_delete=models.CASCADE)

        def __str__(self):
            return (self.display_name if self.display_name
                    else f"{self.first_name} {self.last_name}")

This model is simply a few key pieces of data on a person inside my application. A simple view to get the list of people known to my application might look like this:

    class PersonList(View):
        def get(self, request):
            people = []
            for x in Person.objects.select_related('manager').all():
                obj = {
                    'email': x.email,
                    'first_name': x.first_name,
                    'last_name': x.last_name,
                    'display_name': str(x),
                    'manager': str(x.manager),
                }
                people.append(obj)

            return JsonResponse({'people': people})

This, I would argue, is simple and easy to read. It may be a little verbose for me, the programmer, I admit. But if another programmer comes along behind me, they're fairly likely to understand exactly what's going on here, especially if they are a junior programmer. Maintenance of this code therefore becomes trivial.

Let's now look at a DRF example. First I need a serializer:

    class PersonSerializer(serializers.ModelSerializer):
        class Meta:
            model = Person
            fields = '__all__'
            depth = 1

This looks good, but it doesn't handle the display_name case correctly, because I want the str() method output for that field, not the field value itself. The same goes for the manager field. So I now have to write some field getters for both. Here's the updated serializer code:

    class PersonSerializer(serializers.ModelSerializer):
        display_name = serializers.SerializerMethodField()
        manager = serializers.SerializerMethodField()

        class Meta:
            model = Person
            fields = '__all__'
            depth = 1

        def get_display_name(self, obj):
            return str(obj)

        def get_manager(self, obj):
            return str(obj.manager)

Once my serializer is complete, I still need to set up the view that will be used to actually load the list:

    class PersonList(generics.ListAPIView):
        queryset = Person.objects.select_related('manager').all()
        serializer_class = PersonSerializer

I'll admit, this is pretty lean code. You cannot convince me, however, that it's more maintainable. The junior programmer is going to come in and look at this and wonder:

- Why do only two fields in the serializer have get routines?

- What even is a SerializerMethodField?

- Why is the depth value set on this serializer?

- What does the ListAPIView actually return?

- Can I inject additional ancillary data into the response if necessary? If so, how?

DRF feels like it was designed by Java programmers (does anyone else get that vibe?). REST interfaces always have weird edge cases, and I'd much rather handle them in what I would consider the more Pythonic way: simple, readable, naked views. After all, according to the Zen of Python:

- Simple is better than complex.

- Readability counts.

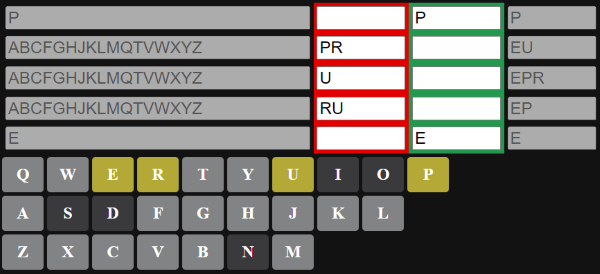

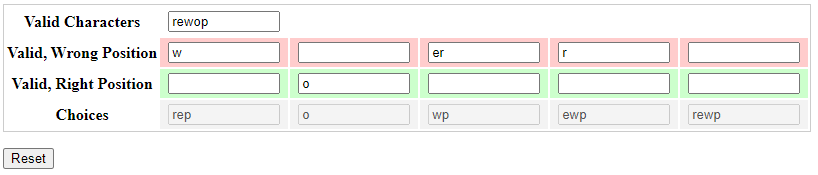

Wordle Helper 2

Mar 23, 2022

My previous Wordle helper has now been supplanted by a newer variant, which adds a number of nice new features. Here's a screenshot of the new UI:

The top five rows represent the slots for each character of the five-letter word. Each row consists of four controls:

- The left-most column displays the possible remaining choices for that position.

- The column with a red background is where you specify letters that are in the word, but not in the given position.

- The column with a green background is where you specify letters that are in the word and are in the given position.

- The final column shows the subset of possibilities using only the letters known to be in the word.

As long as the body of the page has focus, you may simply type letters to remove them from play. Holding shift while typing a letter will add that letter to the pool of known letters. Holding the control key will remove the letter from the pool (if you make a mistake). Be careful with this, however; some browser shortcuts cannot reliably be trapped (ctrl + w being one of them).
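The bookkeeping behind those four columns can be sketched in a few lines of Python. This is a rough approximation rather than the tool's actual source (which runs in the browser); the function name `slot_choices`, its parameters, and the sample game state are all assumptions made for illustration.

```python
import string


def slot_choices(eliminated, known, misplaced, placed):
    """Return (remaining, known_only) letter lists for each of the five slots.

    eliminated: letters removed from play
    known:      letters known to be in the word
    misplaced:  five sets; letters in the word but not in that slot (red column)
    placed:     five entries; the confirmed letter for that slot, or None (green column)
    """
    rows = []
    for slot in range(5):
        if placed[slot]:
            # A confirmed letter leaves only one choice for the slot.
            remaining = [placed[slot]]
        else:
            remaining = [c for c in string.ascii_lowercase
                         if c not in eliminated and c not in misplaced[slot]]
        # Final column: the remaining choices restricted to known letters.
        known_only = [c for c in remaining if c in known]
        rows.append((remaining, known_only))
    return rows


# Hypothetical game state: "o" is confirmed in slot 2, "w" is not in slot 1.
rows = slot_choices(
    eliminated=set("aist"),
    known=set("ow"),
    misplaced=[{"w"}, set(), set(), set(), set()],
    placed=[None, "o", None, None, None],
)
for remaining, known_only in rows:
    print("".join(remaining), "|", "".join(known_only))
```

Each printed line corresponds to one row of the UI: the left side is the first column, and the right side is the final column.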

As an alternative to typing, you can click the on-screen keyboard to remove letters, and Shift + click letters to add them. Here's a second screenshot showing the tool while in use:

Date Nut Balls

Mar 13, 2022

This family favorite is a staple of the holiday season. It's one of my all-time favorite snacks, and you truly cannot eat just one!

- 1 stick butter (or margarine)

- 1 cup light brown sugar

- 8 oz. package of dates, cut fine

- 4 oz. flaky coconut

- 1 cup chopped nuts (peanuts or walnuts)

- 2 cups Rice Krispies

- Powdered sugar

Combine the butter, brown sugar, dates, and coconut in a heavy saucepan. Start a timer when you put it on the stove and cook for 6 minutes. Do not overcook!

After the timer sounds, add the chopped nuts and the Rice Krispies, mix well, and let it cool. Shape the mixture into balls, and roll in powdered sugar while still warm.

Making Stick Butter Spreadable

Mar 5, 2022

Where possible, my family tries to reduce the amount of plastic we consume. One example of this is our choosing not to purchase tubs of spreadable butter, a food staple we eat plenty of. Instead, we convert sticks of butter (which come in paper containers) into spreadable butter. The recipe we use to do this is shown below. It's an easy way to prevent extra plastics from entering the waste stream.

- Two sticks unsalted butter (room temperature)

- 1/2 tsp salt

- 2/3 cup oil

Bring the sticks of butter to room temperature by leaving them out overnight. Place the butter into a bowl, along with the salt and oil of your choosing. We use avocado oil, as it has a pretty neutral taste. Olive oil is also a good choice, but be aware that olive oil can have a fairly overpowering taste.

Mix the ingredients well with a hand-mixer. Pour the contents into a container (we reuse old, plastic butter tubs) and refrigerate. Two sticks of butter fit nicely into a 15-ounce butter container.

Sublime Text Packages I Use

Feb 27, 2022

I love Sublime Text and use it across a number of my computers. Occasionally, one of my systems becomes down-level, especially in terms of the packages I'm using. I know I can sync my user profiles via Git (or something similar), but occasionally it's good to reset things and start from a fresh profile.

So that I can keep track of what I primarily use, I'm writing down the packages I use on my primary development system.

Last Updated: December 23, 2024

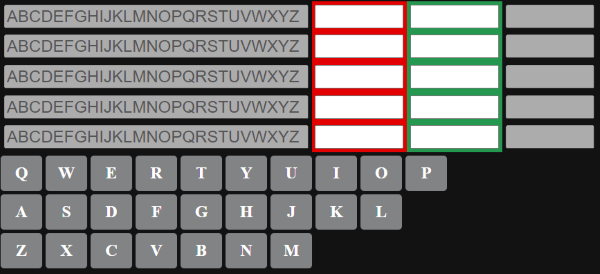

Wordle Helper

Feb 23, 2022

I've been enjoying Wordle, despite the transition to The New York Times (I expect the game to disappear behind the paywall sometime in the near future). Some of the variants of Wordle have also been enjoyable, my favorite among them being Quordle.

One strategy I've used as I've played over the past few weeks is to use a text-editor to keep track of the letters I know are valid, along with their positions. This aids me in figuring out potential words to guess, and others to rule out. Being the programmer that I am, I turned this manual process into a tiny, self-contained web application:

You enter the characters you know are present in the word in the top box. As you learn about the positions of each character, you also fill in that information. In the example shown above, the word I'm trying to guess is "power." I know the o is in the second position, and I know the w is not in the first position. The resulting set of choices at the bottom of each column helps me figure out what it might be.
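The same clues can also be applied directly to a list of candidate words, which is not something the helper itself does. Here's a minimal Python sketch of that idea; the `matches` function and the tiny word list are made up for illustration.

```python
def matches(word, present, placed, excluded):
    """Return True if `word` is consistent with the clues gathered so far.

    present:  letters known to be somewhere in the word
    placed:   {index: letter} for letters whose position is confirmed
    excluded: {index: letters} for letters known NOT to be at that index
    """
    if not all(letter in word for letter in present):
        return False
    if any(word[i] != letter for i, letter in placed.items()):
        return False
    return not any(word[i] in letters for i, letters in excluded.items())


# Made-up word list; a real helper would load a full dictionary.
words = ["power", "tower", "women", "wound", "rodeo"]

# Clues from the example: "o" is second, and "w" is not first.
candidates = [w for w in words
              if matches(w, present="ow", placed={1: "o"}, excluded={0: "w"})]
print(candidates)  # ['power', 'tower']
```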

It was fun to put together this tiny little script.